Topic

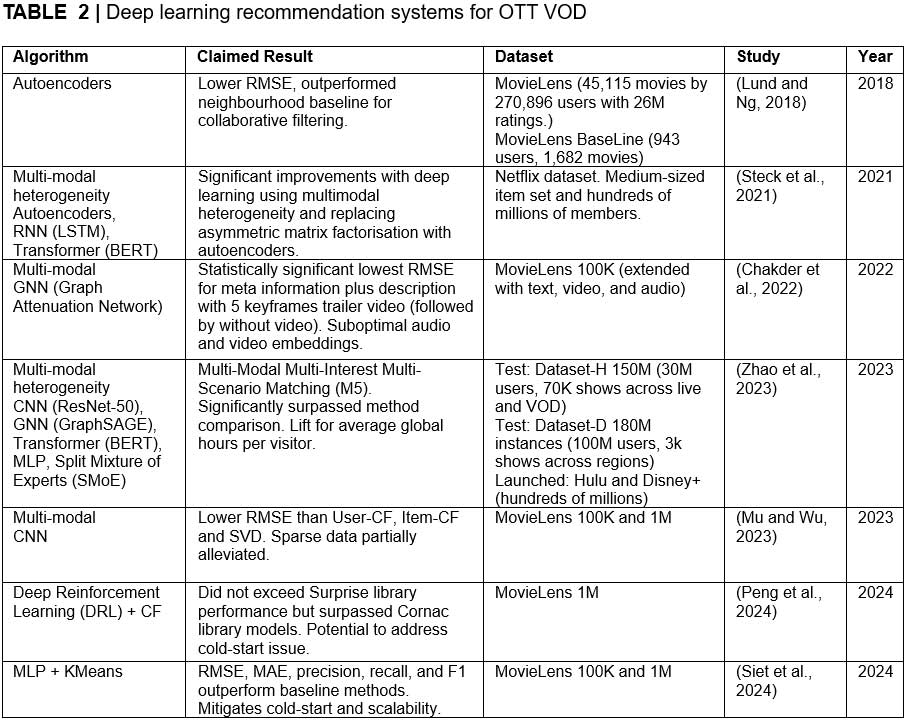

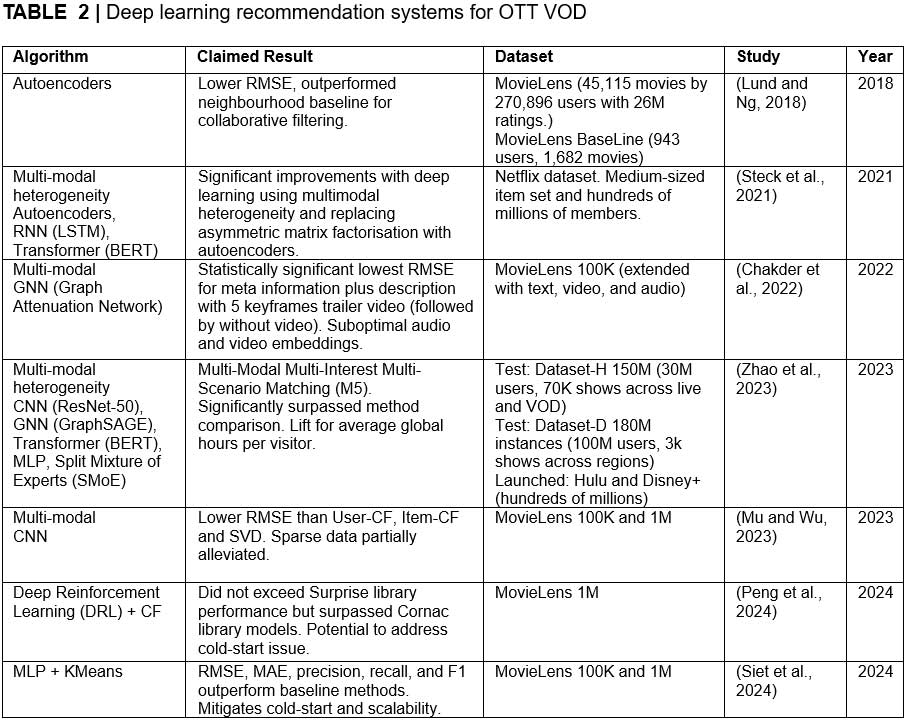

Implementing deep learning techniques in media and entertainment recommendation systems.

Outcomes

What (2)

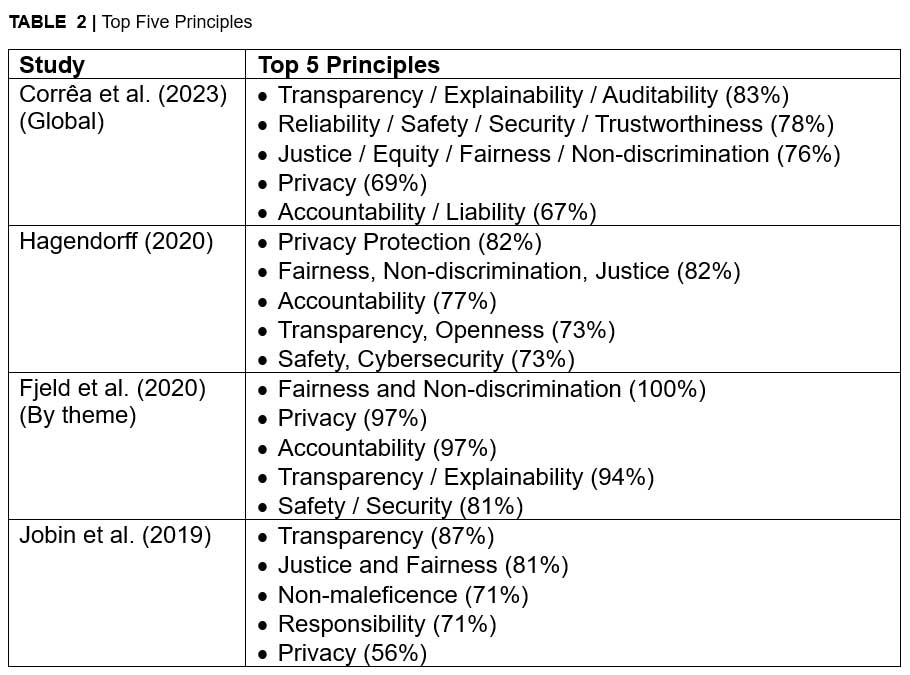

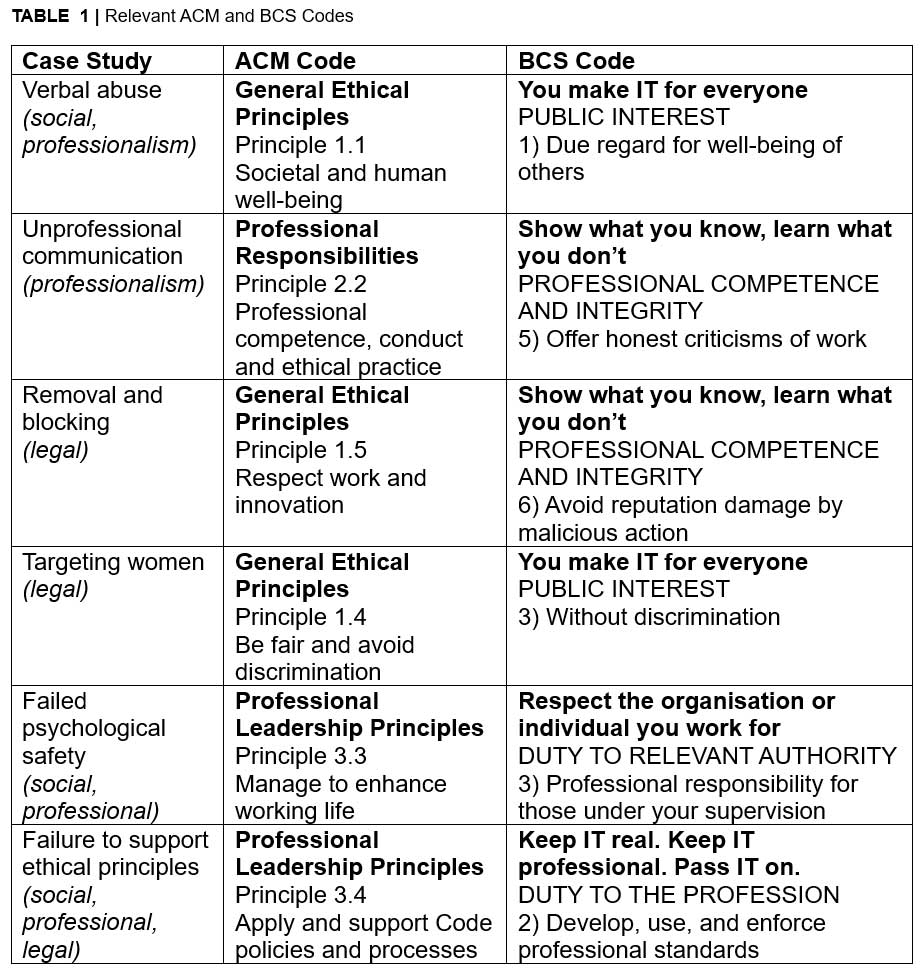

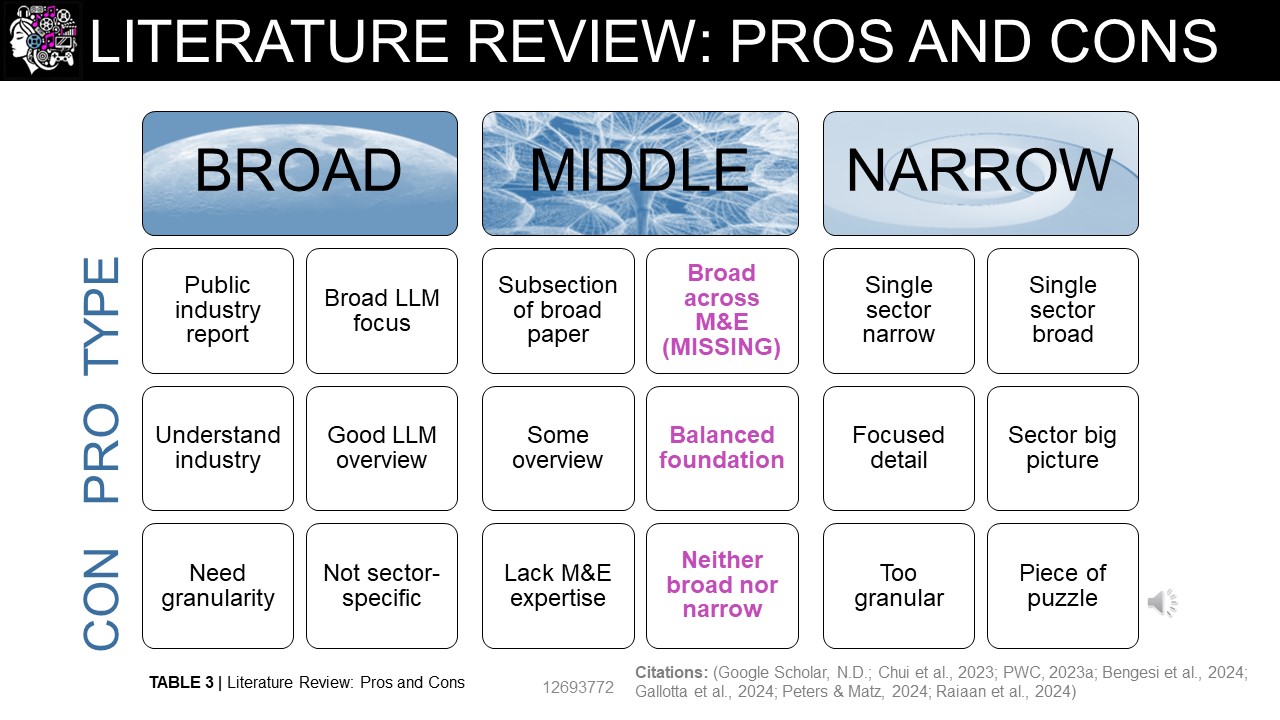

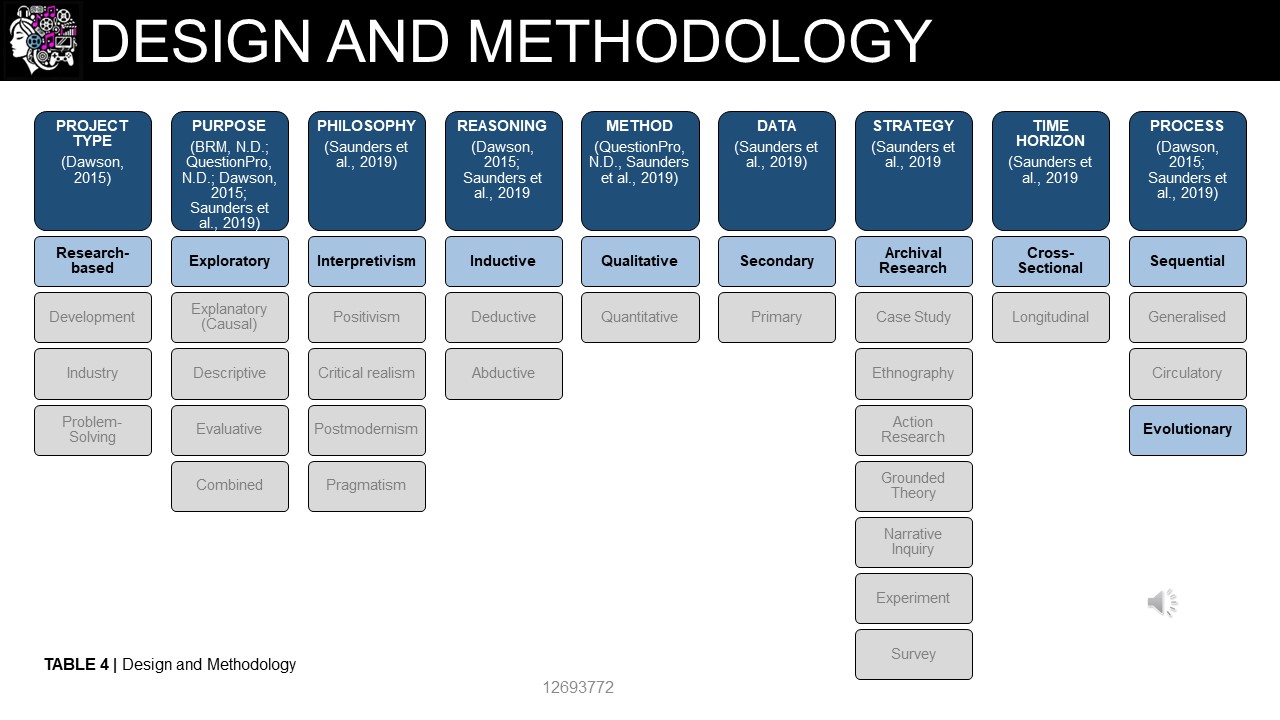

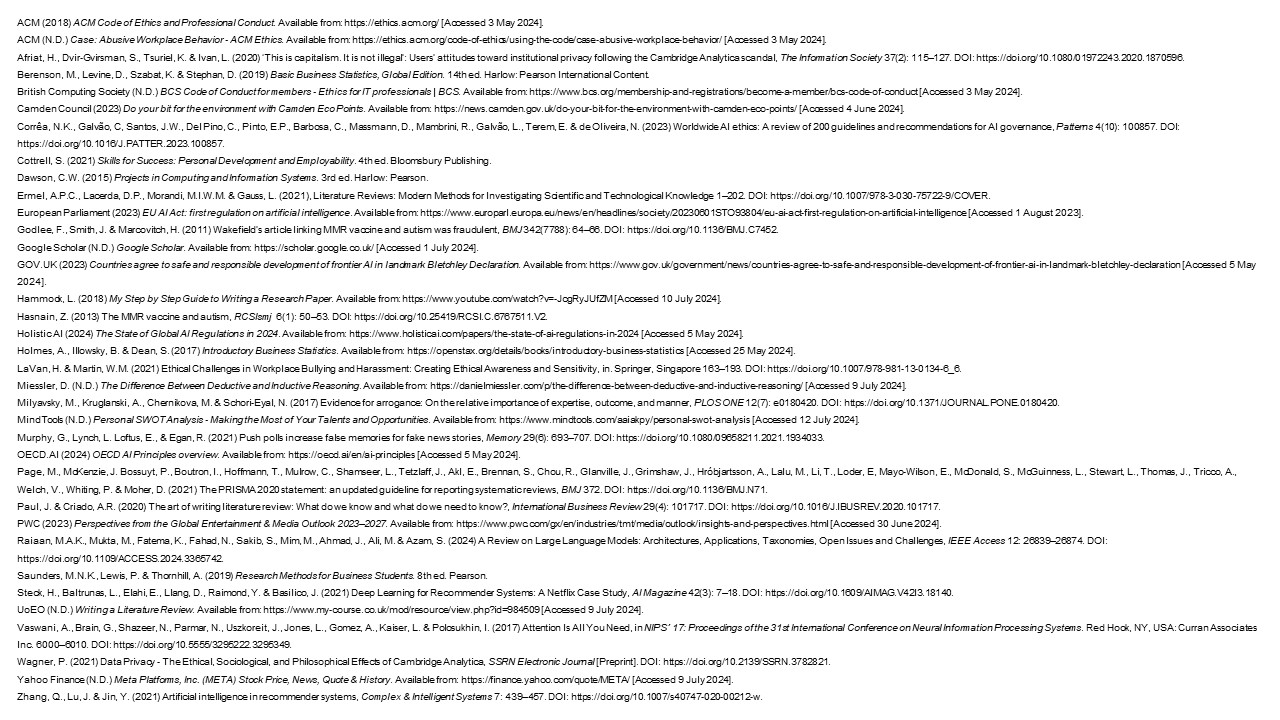

While search strategy guidance was practical, writing guidance felt philosophical (UoEO, N.D.; Ermel et al., 2021). Therefore, I used Dawson's (2015) outline: literature overview, critical evaluation, wider context and gap identification. Although media is my industry, deep learning was new to me. Academic sources like Zhang et al. (2021) provided technical overviews, whereas industry sources like Steck et al. (2021) at Netflix demonstrated practical application.

So What (3)

Restricting my Google Scholar (N.D.) search terms kept the analysis focused while still covering both industry and academic perspectives. Discovering Hammock's (2018) use of tables simplified analysis and presentation, while graphical representation clarified relationships. However, Dawson (2015) treats critical evaluation as a separate section, so feedback requesting criticality throughout was confusing.

Now What

Feedback taught me to avoid bullet points and add more critical analysis. Additionally, I find contrasting academia and industry valuable and will continue this practice.

Feedback

80% (Distinction). 'The level of knowledge provided is outstanding'. 'Outstanding structure and presentation', including visuals. However, the feedback advised adding more context at the beginning, including 'critical pros and cons' throughout, and avoiding bullet points.